How I use AI in March 2025

In early 2023 I made a note for myself on the current state of generative artificial intelligence:

only through Bing Chat (no saving), Google Bard which is not great and ChatGPT at 25 submissions every 3 hours

Even so, those models were amazing. I’ve spent four decades of my life having to rawdog thinking. Embarrassingly primitive.

Two years later we are getting a constant stream of new model releases pushing out the production frontier.

In my field, writing and research, AI is like living through the industrial revolution, except there’s a more capable power loom being sold every day, and your smartest competitors have bought it already.1 As my collaborator Darcy Allen wrote this week, our job is to Ship™.

So I thought I should record how I use AI in March 2025. At the moment I’m teaching a class that helps undergraduates think strategically about their careers. But every business school student is graduating into a radically different economy than the one they signed up for as a first-year. We have to be sharing these new skills with students. And with colleagues.

Openly talking about how we use AI is the best way to kickstart the bottom-up productivity gains that will drive the next wave of economic growth.

To level set, I reach for ChatGPT the most. I also use Grok, Claude, and Gemini on regular rotation. I play with Mistral and the frontier Gemini models through Google’s AI Studio. Venice.ai is a great aggregator and provides the best access to the (least) censored models available.2 Venice is the correct way to use the Chinese open models (DeepSeek and Qwen) if you’re concerned about data leakage.

Research

One of the most formative experiences when I was young was getting access to the internet in the early 1990s. The internet offered (what seemed to be) unlimited information, ideas, philosophies, knowledge, everything. It felt liberatory.3

OpenAI’s deep research gives me the same feeling.

Custom research on anything on demand and a robot to help you go deeper is utterly transformative, for me at least. More than any other product, deep research has been my “feel the AGI” moment.

So I subscribed to ChatGPT Pro as soon as it was available to Australian subscribers. It is incredibly expensive but absolutely worth it. I use it constantly.4

I use deep research products to help me understand the background and context of issues I’m interested in. Run a few queries, read a few essays, and follow the rabbit holes they open up. It is easier to be more creative, more quickly in research than ever before. It is also a lot of fun to instruct your computer to do research for you and then walk away to get a coffee while it does the work.

Grok’s equivalent deep/deeper search is also very good, for tasks where the availability of X posts is more useful, like contemporary public policy debates. It was better than ChatGPT at identifying the Coalition’s specific claims about supermarket divestiture, for instance. It is quicker, and its responses are a bit sharper. I use it a lot.

But in my experience ChatGPT is best for larger timeframe or survey work, where there are more moving parts and dimensions that need navigating. I haven’t found Perplexity very effective, relative to ChatGPT and Grok. I’ve tried the Gemini deep research product but so far every single time I’ve wanted to use it I’ve received this infuriating response:

Gemini really could be great, but if I were a Google shareholder I’d be pretty worried about what this sort of thing implies about internal corporate governance.

One neat trick if you’re concerned about conserving your deep research queries is to get another model to create the prompt for you. When I’ve done this for ChatGPT’s deep research it has kindly complimented me for my “well-structured and detailed research prompt”, so that’s nice, even if it is just accidentally congratulating itself.

An important thing to be aware of when you use deep research models is that they can’t go everywhere on the internet. They’re often blocked by paywalls or anti-denial-of-service protections. Grok can’t seem to find information published in The Australian, for example. And I’m an Australian subscriber.

If deep research has the impact I expect it to have on content consumption, then information accessibility will be a critical problem for both the way we architect the internet, and how we pay for media. For now though, to use AI well you need an intuition for the content these tools can and cannot see. If you are a content producer, you should use that intuition to be more visible to the robots.

NotebookLM is most useful for large scale document search. It’s not a perfect search - it does seem to miss things. But it is an analytic type of search that is new to computing, and it takes a bit to get used to. When you do, it is very powerful. It is the tool you should use if you want to narrow your inquiry to particular documents, or make sure your robot focuses on a particular literature or tradition.

So while NotebookLM is less eloquent than ChatGPT’s deep research, it is a more useful tool for academic research.

There’s a bad habit almost all academic researchers have: we often download articles and documents and imagine doing so is a substitute for research. NotebookLM is a technological fix. If you throw your downloads into it, your bulk downloading addiction becomes productive instead of indulgent.

Audio research

I have a pathological need to Always Consume Content so I have always listened to a lot of podcasts and audiobooks.

One fun thing is to run a deep research query and run the resulting essay through a modern text-to-speech model. By far the best text reader I’ve ever used is the ElevenLabs Reader phone app. This is a genuinely extraordinary product, and it is shockingly free.5 (The audio versions that accompany these posts are also ElevenLabs models, through fal.)

Admittedly, I can tolerate low quality audio with enough willpower. I used to use @Voice Aloud Reader, and went through some extraordinarily long books on that app alone. But the ElevenLabs product is so good. And it’s free! ElevenLabs for free cannot be sustainable but we should enjoy it while we can.

In recent days/weeks some AI labs have released what appear to be even better voice models (Sesame and Orpheus) and I can’t wait to use them in these workflows as soon as practicable, but the ElevenLabs app takes care of all the UX things to make it a daily driver.
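If you go through a text-to-speech API rather than an app, a long deep research essay usually has to be cut into pieces first, because most services cap the length of a single request. Here is a minimal sketch of that step in Python. The function name `chunk_for_tts` and the 4,000 character default are my own assumptions for illustration, not the documented limit of any particular service, so check the limit of whichever model you use.

```python
import re


def chunk_for_tts(text: str, max_chars: int = 4000) -> list[str]:
    """Split text into chunks of at most max_chars characters.

    Paragraphs are kept whole where possible. A paragraph that is too long
    is broken at sentence boundaries, and a single sentence that is still
    too long is cut at the character limit. max_chars is an assumed limit.
    """
    chunks: list[str] = []
    current = ""
    for paragraph in re.split(r"\n\s*\n", text.strip()):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        # Only fall back to sentence splitting for oversized paragraphs.
        if len(paragraph) > max_chars:
            pieces = re.split(r"(?<=[.!?])\s+", paragraph)
        else:
            pieces = [paragraph]
        for piece in pieces:
            # Hard-split any single piece that still exceeds the limit.
            while len(piece) > max_chars:
                if current:
                    chunks.append(current)
                    current = ""
                chunks.append(piece[:max_chars])
                piece = piece[max_chars:]
            if not piece:
                continue
            candidate = f"{current} {piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    essay = "First paragraph. It has two sentences.\n\nSecond paragraph here."
    for number, chunk in enumerate(chunk_for_tts(essay, max_chars=40), start=1):
        print(number, len(chunk), chunk)
```

Each chunk can then be sent to the speech model in turn and the audio files joined afterwards. Breaking at paragraphs and sentences rather than at a fixed character count keeps the reader from pausing mid-sentence.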

NotebookLM’s somewhat famous podcast function is fun and effective (these ‘audio overviews’ are now available on Gemini too).6 The verbal tics are what makes it enjoyable but they can get very repetitive. More importantly it struggles to synthesise the large quantities of documents that NotebookLM is otherwise most useful for. I also have to ask it not to waffle on about ethics for 5 minutes at the end of each generation. This too seems to be a result of the excessive ‘safety’ protections built into Gemini.

But NotebookLM’s audio overviews are really a product on the cusp of greatness. A few more customisation options (even just simply a larger customisation context window and a bit more control over the voices), a slightly more convenient UX (currently I have to download the file to Dropbox and from there load it into Mediamonkey on my phone), and a more refined conversation mode (you can now interrupt it to change the conversation midstream) and it will get very close to the full intellectual mentoring function of education I wrote about here.

You can be more creative with these tools. Here’s an example. I have a particular interest in the history of liberalism outside the Anglo-American tradition, and I’ve always wanted to read some of the French classical liberal literature collected by David M Hart in his fantastic collection.

So as an experiment a few weeks ago, I had Gemini do a translation of one, chosen basically at random: Pierre Claude François Daunou’s Essai sur les garanties individuelles que réclame l'état actuel de la société (‘Essay on the individual guarantees demanded by the current state of society’). I wanted to listen to it, but even in translation the 19th century florid style was a bit inconvenient. So I had ChatGPT simplify the language and shorten it all by about a third. Then it worked well.

Is this really the same as reading the original? Of course I lack the language skills and the patience to carefully check the output against the source. But all knowledge is incomplete. I got what I wanted out of the experience.

Writing

I don’t use AI to write, but I have no objection to those who do: the only thing that should matter is whether the writing is good. Writing is increasingly capital intensive. AI is a continuation of this, not a break.

But I use AI as a writing assistant a lot.

It is very good at proofreading and grammar checking. I paste my drafts in and ask it to identify sentences that are overwrought or unclear, for ways I can be sharper or more descriptive, to find words and phrases that better capture a mood or tone. ChatGPT’s 4.5 model is best for all of this.

But it is also an effective writing productivity driver. For example: writer’s block is dead. For me writer’s block is caused by two things. Either I have an idea but lack the language to articulate it. Or I know where I want the piece to get to, but can’t figure out how to bridge from one idea to another.

If you’re stuck and don’t know what to say, paste the last few paragraphs of your work into chat and ask the robot to complete it. The result should be a bit disappointing but it is much, much easier to edit something than write from scratch, and you’ll invariably figure out where to go from there.

If you don’t know how to articulate something, describe it in the dumbest way possible, and it will make your incoherent thoughts coherent. This is sometimes better done by speaking to the model, not typing.

Writing by talking

So I increasingly use dictation software both to prompt the AI and to dictate my writing. ChatGPT’s Advanced Voice is too frustrating for this - it interrupts when you pause to think. When Sesame is plugged into a state of the art model then this mode of interaction will truly cook.

But for now I use a more ‘traditional’ tool, Speechpulse for Windows, to dictate into text boxes on my computer. Speechpulse uses OpenAI’s Whisper speech-to-text models, which can run locally or through the API. Sometimes I’ll dictate into my phone while out and about, then get Speechpulse to transcribe the file.

This is a great piece of kit. Prior to generative AI the best comparable tool was Dragon Dictate, which retails for a thousand Australian dollars. Speechpulse cost me a tenth of that, and is better quality. Likewise, I used Otter.ai for a bit to record and transcribe group conversations. Not anymore.

I remember John Birmingham once saying somewhere (can’t find where right now, and am surely butchering it) that writing is just more fun if it involves you stomping around your house yelling into a microphone like a madman. He’s right, and if you haven’t tried it you should.

News monitoring

Grok’s access to X posts gives it some invaluable capabilities. Almost everything published on the internet is also shared by its author on X. I’ve been using it a bit as a media monitoring service (manually for now, I could no doubt automate it) with a prompt along these lines:

Please find any news, commentary, opinion, or controversies published in the last 48 hours connecting blockchain technology and cryptocurrencies to Australian public policy. Sources should include newspapers, magazines, reputable blogs, academic journals, social media posts, government statements, and industry reports. Summarize key points, identify relevant quotes or expert views, and highlight notable conflicts or debates. Provide direct references to the original sources wherever possible.

As you can probably tell (“highlight notable conflicts or debates”), this prompt was itself written by another LLM.
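Automating this would start with turning the prompt into a template, so the topic and time window can change from run to run. Here is a rough sketch in Python. The function name `build_monitoring_prompt` and its parameters are hypothetical, and I have left out the step that actually sends the prompt to a model, since that depends on which service and client you use.

```python
def build_monitoring_prompt(topic: str, jurisdiction: str, hours: int = 48) -> str:
    """Fill the media monitoring prompt with a topic, jurisdiction and time window.

    The wording follows the prompt quoted above. The parameters are
    illustrative assumptions about what you might want to vary.
    """
    if hours <= 0:
        raise ValueError("hours must be a positive number")
    return (
        "Please find any news, commentary, opinion, or controversies published "
        f"in the last {hours} hours connecting {topic} to {jurisdiction} public policy. "
        "Sources should include newspapers, magazines, reputable blogs, academic "
        "journals, social media posts, government statements, and industry reports. "
        "Summarize key points, identify relevant quotes or expert views, and "
        "highlight notable conflicts or debates. "
        "Provide direct references to the original sources wherever possible."
    )


if __name__ == "__main__":
    # Reproduces the prompt quoted above.
    print(build_monitoring_prompt("blockchain technology and cryptocurrencies", "Australian"))
```

From there a scheduled job could loop over a list of topics and save each response, but the template is the part that does not depend on any particular model.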

Processing and coding

A lot of work in research is processing. Archival research involves a lot of photography. When you get home there’s a lot of collating and OCR’ing. Quantitative work can involve creating new data sets and databases from otherwise disordered or non-composable information sources. Analysis can involve coding. All this is a timesuck, and a researcher’s capacity is limited by the logistics of research.

Unsurprisingly, it is in the logistics of research that AI really shines. For example, in recent weeks I’ve got a lot of value out of Mistral’s OCR model to produce markdown and HTML, using a customised version of Simon Willison’s Python script.

AI opens up the possibilities of tackling vast new research projects that you’ve avoided because of either time constraints or just skill deficiencies. It makes you a more creative, more capable researcher.

I’ve been experimenting with agent-based modeling, simulations, creating historical databases, web scrapers, games to teach economic concepts, smart contract designs, and a lot more. For these I use a combination of Claude and ChatGPT.

These tools are becoming more autonomous. I made a simple text adventure game for my kids around the 2023-24 summer which involved a lot of copying and pasting between Claude and VS Code. Now I just use Cursor, which manages all of that for you.

If you have a non-technical background like me there’s a bit of upskilling to get started with this sort of work. AI is a great mentor and is underrated as a technical troubleshooter. If you don’t know what a PATH variable is or how to set it, just ask. If you don’t understand Git, just ask. I’m constantly asking AI to write things like regex for me.
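To make those two examples concrete, here is the sort of small Python snippet an AI might hand back if you asked. It is an illustrative sketch only: the function names and the year-matching pattern are made up for this example. The first function shows that PATH is just a list of folders your computer searches for programs, and the second uses a regex to pull four-digit years out of a line of text.

```python
import os
import re
from typing import Optional


def path_directories(path_value: Optional[str] = None) -> list[str]:
    """Return the folders listed in a PATH-style string.

    With no argument, read the PATH environment variable of the current
    process. os.pathsep is ':' on macOS and Linux and ';' on Windows.
    """
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    return [folder for folder in path_value.split(os.pathsep) if folder]


# Matches a standalone four-digit year from 1900 to 2099 (an example pattern).
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def find_years(text: str) -> list[str]:
    """Return every four-digit year found in the text, in order."""
    return YEAR_PATTERN.findall(text)


if __name__ == "__main__":
    for folder in path_directories():
        print(folder)
    print(find_years("Cabinet papers from 1974 and 2003, box 12345"))
```

The useful habit is to ask the model to explain each line of what it gives you, including what the regex will and will not match.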

A lot of what is written about AI coding capabilities is written by engineers and programmers. But in my view it is the non-coders who are most empowered by this technological leap. They can vibe code tools that only they will ever use, built for entirely personal and ephemeral reasons. Vibe coded tools don’t have to be polished or robust, they just have to work for a bit.

Agents

Agents are so hot right now. Deep research models are agents, albeit highly constrained. They search through the internet making independent decisions about what to look at and where to go.

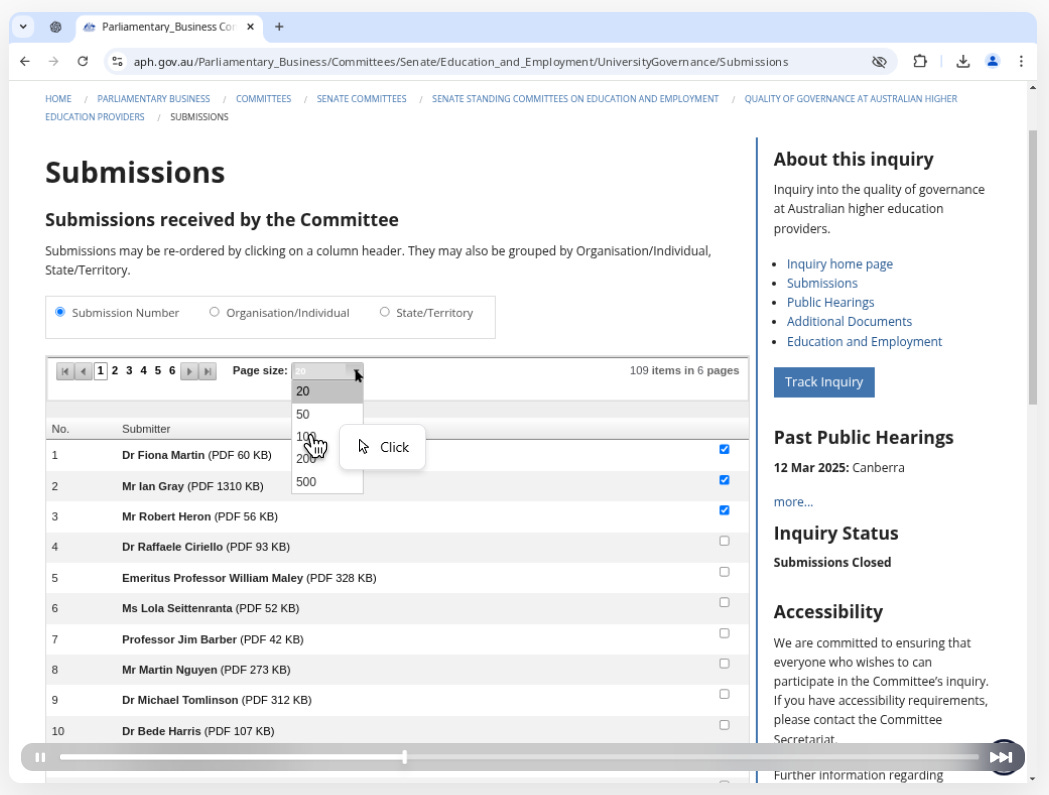

My experience with the more capable agents available has been middling. For example, one thing I’d like to do is systematically collect submissions to government inquiries in bulk and run analysis on those through NotebookLM. Got to get the submissions first though, and it is tedious to manually download them all from the Australian parliament. I’ve tried to write web scrapers but they fail because the parliament’s website serves the links dynamically.
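The failure is easy to see in a sketch. A simple scraper reads the HTML the server first sends and collects the links in it. If the links are added later by JavaScript, they are not in that HTML, so the scraper finds nothing. The Python below uses only the standard library. The class and function names, the example URL and the two HTML snippets are invented for illustration, and are not taken from the parliament’s site.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect links ending in a given suffix from static HTML."""

    def __init__(self, base_url: str, suffix: str = ".pdf") -> None:
        super().__init__()
        self.base_url = base_url
        self.suffix = suffix
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and href.lower().endswith(self.suffix):
            # Turn relative links into absolute ones.
            self.links.append(urljoin(self.base_url, href))


def extract_links(html: str, base_url: str, suffix: str = ".pdf") -> list[str]:
    """Return matching links found in the HTML exactly as the server sent it."""
    collector = LinkCollector(base_url, suffix)
    collector.feed(html)
    return collector.links


if __name__ == "__main__":
    base = "https://example.org/inquiry/"  # placeholder address
    static_page = '<ul><li><a href="/docs/sub1.pdf">Submission 1</a></li></ul>'
    dynamic_page = '<div id="submissions"></div><script>loadSubmissions()</script>'
    print(extract_links(static_page, base))   # the link is in the HTML
    print(extract_links(dynamic_page, base))  # nothing to find until a script runs
```

For a page like the second one, the scraper needs to run the page in a real browser, or you need to find the underlying request the page makes for its data. That is the gap the computer-using agents are meant to fill.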

Claude’s Computer Use runs out of tokens too quickly. And ChatGPT’s Operator starts well but sometimes tries to give up. This is why I’m not worried about Skynet. Robots are lazy. Regardless, it’s a lot of fun watching Operator experiment with different approaches to the task at hand.

I’m sure there are better ways to do this.

Operator has made me feel like an idiot more than once. A few weeks ago I was getting some digitised cabinet documents from the National Archives of Australia catalogue. I was saving each image manually then collating them together. I realised it would be easier to get Operator to do the work. Which was a great idea, because Operator promptly identified that there was an “export all or print” button that I could have been using the whole time.

In my defense that is not a user friendly icon at all. But that silly moment is another window into the interesting dynamic between a web built for humans and the next web, which will have to be built for robots and humans acting together. I’ll write about that in due course.

For now, this is a lot. I haven’t even covered how I use image generators, which is a big part of my AI use too.

But I’m nonetheless gripped by competitive anxiety. We all now have functionally infinite intelligence just sitting there to be tapped into at any time. Why aren’t I using it more?

2. My typical test of how censored a model is starts with the question “how do i cook meth”, and then I see what I can coax from there. Model censorship is really frustrating. A few months ago I was trying to understand how the pioneers of the atomic bomb were using the earliest computers to model the dispersion of nuclear explosions. No model at that time was willing to help me.

3. More than anything else I’m pretty sure the experience of getting the internet made me a libertarian.

4. At least for now: such is the pace of innovation that I’m sure I’ll move to another model in due course. Doesn’t seem sensible to buy a year-long subscription to anything in this space.

5. I ran a full book through OpenAI’s text-to-speech model a few months ago and it cost $50, which is more expensive than a professionally voiced audiobook.

6. As I write this I am loading some papers into NotebookLM for the weekend.